Artificial intelligence is hot due to the recent advancements in deep learning neural networks. Deep Learning is the use of artificial neural networks that contain more than one hidden layer . One fundamental principle of deep learning is to do away with hand-crafted feature engineering and to use raw features like plain images. Technical breakthrough technology in GPU computing power has boosted the application of advanced neural networks also known as Deep Learning. In the last few years, Deep Learning (DL) has been used widely to produce state of the art results in fields like speech recognition, computer vision etc.

In this article we’ll talk about how we design a deep learning solution given a problem.

Step 1 : Collect Data

One of the main reasons for high popularity of DL in the recent years stems from the fact that there is a lot of data available. Once we define our target tasks we must collect lots of training data which has a similar distribution as our target data. This is a basic but important step, because if the distribution isn’t similar, what the algorithm learns from training data (like driving a truck) won’t help it when it predicts on test data (this is more like driving an army tank :P) which is a different distribution. Also the data must be balanced across classes to avoid bias - let’s say we have classes A and B, if we have x examples of A and 100x examples of B then the model will be biased towards a particular class and hence affects classification accuracy.

A good read on how to acquire data: Data Acquisition

Step 2: Model Goals

Clearly define the goals for the model. Taking the example of driving, you would want a taxi driver to learn differently than a Formula 1 driver. If you teach them in the same way , although it’s still about driving they won’t perform well in their respective tasks. In deep learning, we define a loss function.

Loss functions represent the amount of inaccuracy in the prediction, for different problems we need to use different types of loss functions. Choosing the right one is very important. Generally we use Cross-Entropy loss for classification problems, Mean-Squared Error or Mean Absolute Error for regression problems. In keras you can find them here - losses keras

Below is an old article from 2007, but still worth a read if you have the patience Design of Loss Functions

Step 3: Build a simple model

It is well known that Deep Learning is a computationally expensive process, but it’s also electrically and financially expensive process with GPUs using up lot of power and not to mention it takes lot of money to rent/buy a good GPU. That’s Time+Money.

Many data science problems can be solved by using classic statistical Machine Learning techniques, deep learning in those cases would be too complex a representation and tends to overfit the data. If your problem has a chance of being solved by just choosing a few linear weights correctly, you may want to begin with a simple statistical model like logistic regression.

Start with a simple, shallow neural network and evaluate it’s performance before spending time and money on training a large deep network on an expensive GPU. Smaller models train quickly and help us get a good picture of the kind of accuracies we can expect and identify flaws in data/implementation if any. Don’t waste time tuning all the hyperparameters in this model as its only to get an understanding of the data and model performance on the task, use a simple optimizer like “Adam” or “SGD” with momentum.

We could go much deeper, on how to set up baselines but this is out of scope of this article! Refer to this article to learn more on Setting up a simple model .

Step 4: Real game begins.

We have our basics right, data ready (and hopefully cleaned), we’ve set up a simple model and a goal for our model. How do we make our model better? It’s not that easy to answer that question, but let’s go over some basic pointers so as to minimise our chances of being “very wrong”.

Let’s start by asking, what is the accuracy we want to achieve? For Deep-Learning tasks like vision or speech, matching human accuracy is something we should try to achieve. So that number X:human accuracy is our target goal. The training data is the one that we feed into the model to learn from, so that the model learns the representation of the data and will learn to classify new data. Now if the training error is too high, then it’s like a person who after hours and hours of training still doesn’t seem to have gained any skills. We respond to this just like how a real employer will do, get a “smarter” person for that job one who can learn better than the previous. In deep learning this is done by building a complex model, by either adding more layers or adding more neurons per layer in the current network. More neural gives the model the ability to learn more complex representation and develop a deeper understanding of the task.

Another possible solution is to change the learning algorithm itself that is instead of a feedforward neural network, a convolutional neural network would do well on images. All set and done, nice new model does well on training data.

But the real deal is performance on the testing dataset, test the model and find the test accuracy. If the test accuracy is much larger than the training accuracy, this is a problem of overfitting. The new “smarter” employee was just memorising the data and doing well on that, but given a new situation he is failing.

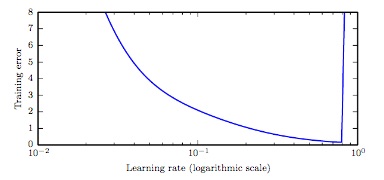

To tackle this problem, the best thing to do is gather more data and train the model. In many cases getting more data is really expensive. So this is the time to use regularisation, we have different types of regularisation like L1 , L2, Dropout to help the neural network generalise better. Also we try tuning the hyperparameters to achieve best model accuracy. Tune the learning rate as it’s the most important hyperparameter of all.

I won’t go too deep into hyper-parameter tuning and regularisation as it’s out of scope of this article. There are amazing research papers on these topics, I would highly encourage you to go through those.

If after all this you still get a bad test accuracy on the model, but high training accuracy go back and refer “collecting data” as it is highly likely that this is due to a mismatch in the distribution of training and testing data.

Happy (Deep) Learning!

Subscribe to our newsletter

Get the latest updates from our team delivered directly to your inbox.

Related Posts

Using AI to detect Facial Landmarks for improved accuracy

Using AI to detect Facial Landmarks for improved human face recognition accuracy. A complete tutorial on how we used AI to detect facial features of humans.

AI in 2024: What Actually Worked and What’s Coming Next

AI is everywhere, and it’s not just for the big players. Here are some of the most interesting AI projects that worked in 2024.

Skcript's Enterprise AI Manifesto

Our strict guidelines on how we build Enterprise AI products at Skcript.