Hyperledger Fabric is becoming very popular, and the community has come up with various strategies for deploying it in production. One of them is the Docker Swarm method, which is mainly useful for a multi-node architecture, the likely setup for a proper blockchain network. In this article, we’ll see how to set up Docker Swarm for a Hyperledger Fabric network.

Prerequisites

Before proceeding, make sure you are familiar with how Hyperledger Fabric works and with the architecture behind it. That will make this workflow much easier to follow.

Also make sure you use Ubuntu machines for this deployment. It won’t work on a Mac, since Docker Swarm behaves a little differently on macOS.

- 3 machines/VMs with Ubuntu 16.04

- Docker

- Docker Compose

Pulling Docker Images for Hyperledger Fabric

This tutorial uses Hyperledger Fabric 1.1. I haven’t tested it with 1.2, but it should work as expected. For a smooth run, make sure you use the 1.1 images by pulling the 1.1.0 tag:

    docker pull hyperledger/fabric-tools:x86_64-1.1.0
    docker pull hyperledger/fabric-orderer:x86_64-1.1.0
    docker pull hyperledger/fabric-peer:x86_64-1.1.0
    docker pull hyperledger/fabric-javaenv:x86_64-1.1.0
    docker pull hyperledger/fabric-ccenv:x86_64-1.1.0
    docker pull hyperledger/fabric-ca:x86_64-1.1.0
    docker pull hyperledger/fabric-couchdb:x86_64-0.4.6

Finally, tag them as the latest images so that these images are the ones used when docker-compose runs.

    docker tag hyperledger/fabric-ca:x86_64-1.1.0 hyperledger/fabric-ca:latest
    docker tag hyperledger/fabric-tools:x86_64-1.1.0 hyperledger/fabric-tools:latest
    docker tag hyperledger/fabric-orderer:x86_64-1.1.0 hyperledger/fabric-orderer:latest
    docker tag hyperledger/fabric-peer:x86_64-1.1.0 hyperledger/fabric-peer:latest
    docker tag hyperledger/fabric-javaenv:x86_64-1.1.0 hyperledger/fabric-javaenv:latest
    docker tag hyperledger/fabric-ccenv:x86_64-1.1.0 hyperledger/fabric-ccenv:latest
    docker tag hyperledger/fabric-couchdb:x86_64-0.4.6 hyperledger/fabric-couchdb:latest

Infrastructure

In this article, we’ll look at a Fabric network with 3 organizations installed on 3 physical machines. Since these are production deployment steps, we’ll use a Kafka-based ordering service with 3 orderers (one per organization). The infrastructure also includes 1 Fabric CA per organization and CouchDB as the world state database for every peer.

We’ll have one channel called mychannel, with a chaincode named mychaincode deployed on it.

What is Docker Swarm?

Docker Swarm is Docker’s tool for clustering and scheduling containers across your infrastructure. It orchestrates the cluster and provides recovery mechanisms for high availability, which makes it a good fit for a blockchain network.

In a Docker Swarm you create a cluster of nodes that communicate with each other over an overlay Docker network. The nodes are categorised into worker nodes and manager nodes, one of which acts as the leader. The manager nodes control the swarm and are responsible for scheduling services and recovering containers after a failure, while the worker nodes run the containers.

In our use case, we’ll use 3 physical machines: 1 will be the manager (the leader) and the other two will be worker nodes. We assume that the manager node belongs to the blockchain owner, who has the rights over the private blockchain.

Challenges

When we deploy a Fabric network on Docker Swarm, we face the following challenges.

- When we deploy a set of containers, Swarm by default decides on its own which node each container runs on. In a Hyperledger Fabric network, however, each organization maps to a specific node, which might be owned by that organization. So we have to handle node targets carefully before deploying.

- The certificates for each container are usually mounted using paths relative to the docker compose YAML file. In Docker Swarm, where we deploy to different servers, relative paths don’t work and only absolute paths can be used. To address this, we’ll choose a specific path on each VM or machine to host the certs, and use that path in the docker compose files.

Getting Started

To make this deployment simpler, we’ve created scripts and configuration that we’ll use in the steps of this article.

Creating VMs or Physical Machines.

As the first step, create 3 VMs on a service like AWS or GCP, or set up physical machines, with Ubuntu 16.04. Once the machines are ready, SSH into them and install Docker and Docker Compose.

Clone the repository.

As mentioned earlier, we’ve created a sample project that has the scripts and config for running Hyperledger Fabric on 3 machines. Clone that repo on all of your machines.

    git clone https://github.com/skcript/hlf-docker-swarm

Once you clone it, you’ll see two folders: one has the sample chaincode (Fabcar) and the other has the scripts and configuration for the docker swarm network. In this article, we’ll mostly use the scripts that are already written.

Understanding the configurations

Most of the configuration is similar to the single-node deployment scripts. The key things to notice here are the docker compose files and the settings that were modified to deploy the services on the swarm nodes.

Docker Compose Configurations for Swarm

With Docker Swarm, the compose files need some extra configuration. Under each service block, we have to define a deploy section, which holds settings such as the replica count, the restart policy for when a container fails, and, most importantly, the worker/manager node on which the service has to be deployed. This matters for a Hyperledger Fabric network because the certs have to be placed on specific VMs or physical machines in the network. We use swarm hostnames to pin each service to a node. The hostnames can be found with the following command:

    docker node ls

The deploy section of a service then looks like this:

    deploy:
      replicas: 1
      restart_policy:
        condition: on-failure
        delay: 5s
        max_attempts: 3
      placement:
        constraints:
          - node.hostname == <MACHINE_HOSTNAME>

And when you define the network in each compose file, you have to declare it as an external overlay network.

    networks:
      skcript:
        external:
          name: skcript

In our sample network, you’ll see this in all the docker compose files.

Mounting the volumes

In the usual single-machine deployment, we mount the certs into the container, and in most cases the host path is relative to the directory of the compose files. In a swarm, however, we can’t use relative paths: the containers are deployed from one manager node to any of the worker nodes, so a path relative to the compose files on the manager is meaningless on the node that actually runs the container.

So you have to use absolute paths for every volume and make sure they exist on the corresponding machines. For instance, in our orderer_org1 service we’ve mounted the following:

    volumes:
      - /var/mynetwork/certs/crypto-config/ordererOrganizations/example.com/users:/var/hyperledger/
      - /var/mynetwork/certs/crypto-config/ordererOrganizations/example.com/orderers/orderer0.example.com/msp:/var/hyperledger/msp
      - /var/mynetwork/certs/crypto-config/ordererOrganizations/example.com/orderers/orderer0.example.com/tls:/var/hyperledger/tls
      - /var/mynetwork/certs/config/:/var/hyperledger/config

Each host path is an absolute path pointing to a folder inside /var/mynetwork/. So make sure these paths are available on the node org1, which is defined in the deployment policy.
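Since a missing host path only shows up once a service fails to start, it can help to check the paths on each machine beforehand. The following is a minimal sketch, not part of the sample repository: check_mount_paths is a hypothetical helper name, and the paths in the usage comment are taken from the volumes example above.

```shell
# Report which of the given host paths are unusable as swarm bind mounts.
# Prints one line per problem and returns non-zero if any path is
# relative or does not exist on this machine.
check_mount_paths() {
    missing=0
    for path in "$@"; do
        case "$path" in
            /*) ;;  # absolute path, fine
            *) echo "not absolute: $path"; missing=1; continue ;;
        esac
        if [ ! -e "$path" ]; then
            echo "missing: $path"
            missing=1
        fi
    done
    return "$missing"
}

# Example, run on each machine:
#   check_mount_paths /var/mynetwork/certs/crypto-config /var/mynetwork/certs/config
```

The helper prints nothing and returns 0 when every path is present, so it can be used as a guard in front of a deployment script.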

Deployment

Now let’s get into the deployment process. Once you have cloned the repository, open it in the terminal. Also make sure you have 3 machines ready for a multi-host deployment.

    cd hlf-docker-swarm/network

Here we have a set of predefined scripts that you have to run in order to set up the environment for Docker Swarm.

Step 1 : Setup Swarm Network

As the first step, we have to create a new swarm.

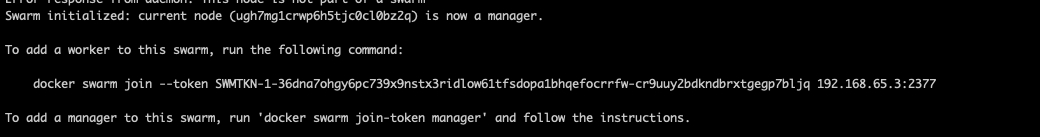

    docker swarm leave -f
    docker swarm init

The first command makes the node leave any swarm it had previously joined; the second creates a new swarm.

When you run it, you’ll receive a worker join command containing a token. Take this command and run it on the other nodes that are to join the swarm.
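If the join command has scrolled away, the manager can print it again. The sketch below wraps the two Docker commands involved; the function names are hypothetical, and it assumes the manager is reachable on Docker’s default swarm management port, 2377.

```shell
# Run on the manager: print the full "docker swarm join ..." command
# that worker nodes should run.
show_worker_join_command() {
    docker swarm join-token worker
}

# Run on each worker: join the swarm with the token printed by the
# manager. Arguments: <token> <manager-ip>
join_swarm_as_worker() {
    token="$1"
    manager_ip="$2"
    docker swarm join --token "$token" "${manager_ip}:2377"
}

# Example, on a worker (both values are placeholders):
#   join_swarm_as_worker <TOKEN> <MANAGER_IP>
```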

Once the other nodes have joined, you can use the following command to verify it.

    docker node ls

You’ll see all the nodes that joined the swarm, along with their hostnames.
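For a scripted check, the node list can be counted instead of read by eye. This is a small sketch with a hypothetical function name; it relies on the --format option of docker node ls and has to run on a manager node, since only managers can list nodes.

```shell
# Succeeds only if the swarm has exactly the expected number of nodes.
swarm_has_node_count() {
    expected="$1"
    # One hostname per line; count the non-empty lines.
    actual=$(docker node ls --format '{{.Hostname}}' | awk 'NF { n++ } END { print n + 0 }')
    echo "nodes in swarm: $actual (expected $expected)"
    [ "$actual" -eq "$expected" ]
}

# Example for the 3-machine setup in this article:
#   swarm_has_node_count 3
```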

Alternatively, we also have a single script that runs the steps above.

    ./setup_swarm.sh

Step 2: Create Overlay Network

Once you’ve created the swarm and joined the nodes, we have to create an overlay network that the containers will use to communicate across the swarm. In this article, I’ll name the network skcript. Run the following command to create the overlay network:

    docker network create --driver overlay --subnet=10.200.1.0/24 --attachable skcript

Or, you can use my script, which does the same.

    ./create_network.sh

Now if you run docker network ls, you’ll be able to see the newly created overlay network.
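The same check can be scripted by filtering the network list down to overlay networks. A minimal sketch, with a hypothetical function name, using the --filter and --format options of docker network ls:

```shell
# Succeeds if an overlay network with exactly this name exists.
overlay_network_exists() {
    name="$1"
    docker network ls --filter driver=overlay --format '{{.Name}}' |
        grep -qxF -- "$name"
}

# Example:
#   overlay_network_exists skcript && echo "overlay network is ready"
```

The exact-match flags on grep keep a similarly named network from being mistaken for the one the compose files expect.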

Step 3 : Node configuration and moving crypto certs

Now that the swarm and the network are ready and all the nodes have joined, it’s time to configure our docker compose files so that they deploy to the right nodes. We also have to move the certs to the corresponding path on every machine.

Before configuring the nodes, make sure the certs have been moved to the absolute path that is mentioned in the volumes. Clone the repo on all the machines and run the following command, which moves the certs to the corresponding folder.

    ./move_crypto.sh

To configure the nodes’ hostnames, edit the .env file located in the root of the network folder.

    nano .env

Update the following variables with the hostnames of the corresponding machines. Use the hostnames reported by docker node ls earlier.

    ORG1_HOSTNAME=<ORG1 HOSTNAME>
    ORG2_HOSTNAME=<ORG2 HOSTNAME>
    ORG3_HOSTNAME=<ORG3 HOSTNAME>

After editing, save the file and close it. Now run our script to apply these values to all the compose files:

    ./populate_hostname.sh

The above command also maps the certs for every Fabric CA. This should help if you ever regenerate the certs.
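A placeholder left in .env leads to services that can never be scheduled, so it can be worth checking the file before running populate_hostname.sh. The sketch below is not part of the repository. It assumes the file uses plain KEY=value lines and that the three variables are named ORG1_HOSTNAME, ORG2_HOSTNAME and ORG3_HOSTNAME; check_env_hostnames is a hypothetical name.

```shell
# Check that each ORGn_HOSTNAME variable in the env file has a real value
# (non-empty and not a leftover <...> placeholder).
check_env_hostnames() {
    env_file="$1"
    status=0
    for var in ORG1_HOSTNAME ORG2_HOSTNAME ORG3_HOSTNAME; do
        # Use the last assignment of the variable, if there is one.
        value=$(sed -n "s/^${var}=//p" "$env_file" | tail -n 1)
        case "$value" in
            ''|'<'*) echo "$var is not set"; status=1 ;;
            *) echo "$var=$value" ;;
        esac
    done
    return "$status"
}

# Example, from the network folder:
#   check_env_hostnames .env
```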

Step 4 : Deploy the containers

Finally, all we have to do is deploy the network to the swarm. I’ve created a single script for it.

    ./start_all.sh

This script runs a set of commands, each of which deploys the services from a compose file to its targeted node.

To be clear, we’re deploying the services/containers from our manager node to all the targeted nodes in the swarm. That means you don’t have to run anything on the other nodes apart from the swarm join command and move_crypto.sh, which makes the process a lot easier.
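For readers who want to see what deploying from the manager can look like, here is a rough sketch built on docker stack deploy. It is an illustration under assumptions, not the contents of start_all.sh: the function name, the stack name and the compose file names in the usage comment are all hypothetical.

```shell
# Deploy one or more compose files into a single swarm stack.
# Usage: deploy_stack <stack-name> <compose-file>...
deploy_stack() {
    stack="$1"
    shift
    for file in "$@"; do
        echo "deploying $file to stack $stack"
        # Stop at the first compose file that fails to deploy.
        docker stack deploy --compose-file "$file" "$stack" || return 1
    done
}

# Example, on the manager node (file names are placeholders):
#   deploy_stack fabric docker-compose-org1.yml docker-compose-org2.yml
```

The manager schedules each service on the node named in its placement constraint, so the command only has to be run once, on the manager.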

This usually takes some time, depending on the network speed and the machines’ performance. Once it has completed, run the following command and make sure nothing is returned:

    docker service ls | grep "0/1"

This command checks for services whose container has not started. If you find any, run the following command to debug what went wrong:

    docker service ps <service id>

I’ve listed the most common errors and challenges of this setup below. Have a look at them if you get stuck.
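The grep for "0/1" only catches services with a single replica. A slightly more general variant, sketched here with a hypothetical function name, compares the running and desired replica counts reported by docker service ls:

```shell
# Print every service whose running replica count differs from the
# desired count (for example "0/1"); print nothing once all services
# have converged.
list_unready_services() {
    docker service ls --format '{{.Name}} {{.Replicas}}' |
        awk '{ split($2, r, "/"); if (r[1] != r[2]) print $1, $2 }'
}

# Example:
#   list_unready_services
```

For any service it reports, docker service ps --no-trunc <service id> shows the full, untruncated error message.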

Step 5 : Setting Up Channels & Chaincode

To complete the setup, we need to create the channel, join the peers to it, and install and instantiate the chaincode. This is similar to the procedure for a single-machine deployment.

In our sample, just run the following commands:

    ./scripts/create_channel.sh
    ./scripts/install_chaincodes.sh

These scripts use the Fabric CLI service to set up the channels and chaincodes.

Once everything is done, you’ll have a multi-host Hyperledger Fabric network. To add more nodes, you just have to create more compose files and the corresponding organization configuration.

Common Errors & Mistakes

The following is a curated list of problems you might face during this setup, along with their resolutions.

- If you see “Invalid mount config” in your docker service logs, check that the certs are present at the right location on all the machines.

- CA failed? This is mostly caused by invalid certs being provided to it. Try running populate_hostname.sh again.

- If you face network issues, make sure you ran create_network.sh before start_all.sh.

This boilerplate has been used by several of our clients and in many of our production environments.
